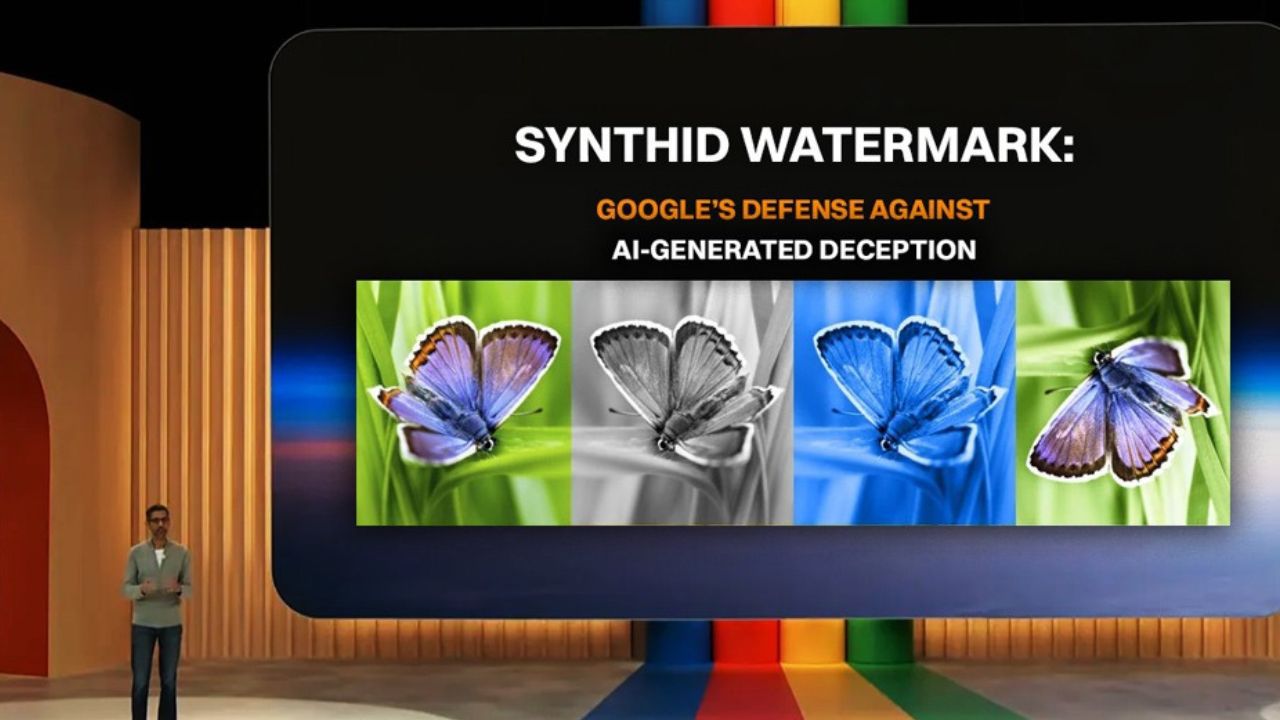

At the recently concluded Google I/O 2025 developer conference, Google introduced the SynthID Detector, a new tool designed to identify content generated by its AI models. Developed by Google DeepMind, this verification portal aims to improve transparency in the rapidly evolving landscape of generative media.

SynthID Detector: How it Works

The SynthID Detector scans images, audio, video, or text for embedded watermarks known as SynthID. These watermarks are imperceptible to humans but can be detected by the tool to determine if the content was created using Google's AI models such as Gemini, Imagen, Lyria, and Veo. When users upload media to the portal, it highlights specific segments that are likely to contain these watermarks, providing a granular analysis of the content's origin.
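The segment-level highlighting described above can be illustrated with a minimal sketch. It is not Google's implementation or API: the `Segment` type, the `flag_segments` helper, the 0.5 threshold, and the sample scores are all hypothetical, and the sketch simply assumes that some detector has already assigned each segment a watermark-likelihood score between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float   # segment start, in seconds (hypothetical unit)
    end: float     # segment end, in seconds
    score: float   # hypothetical watermark-likelihood score in [0, 1]


def flag_segments(segments, threshold=0.5):
    """Return merged (start, end) spans whose score meets the threshold."""
    spans = []
    for seg in sorted(segments, key=lambda s: s.start):
        if seg.score < threshold:
            continue
        # Merge with the previous span when the two touch or overlap.
        if spans and seg.start <= spans[-1][1]:
            spans[-1] = (spans[-1][0], max(spans[-1][1], seg.end))
        else:
            spans.append((seg.start, seg.end))
    return spans


if __name__ == "__main__":
    # Illustrative, made-up scores for a 20-second clip.
    clip = [
        Segment(0.0, 5.0, 0.91),
        Segment(5.0, 10.0, 0.87),
        Segment(10.0, 15.0, 0.12),
        Segment(15.0, 20.0, 0.78),
    ]
    print(flag_segments(clip))  # [(0.0, 10.0), (15.0, 20.0)]
```

Merging adjacent flagged segments keeps the highlighted regions readable, which is one plausible way a portal could present "where" a watermark was found rather than listing every small segment separately.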

SynthID's Initial Rollout

Google is currently rolling out the SynthID Detector to early testers, with plans to expand access through a waitlist. Feedback from these initial users will inform further improvements to the tool, which Google says is meant to foster responsible AI use and increase visibility around synthetic media.

SynthID Detector can quickly identify SynthID watermarks in audio and images – even after edits like cropping and adding filters. ✂️

The tool can even show where in the content it thinks AI has been used.

Soon, you'll be able to detect AI-generated content in text and video… pic.twitter.com/hGHmsbOWIU

Source: Google DeepMind (@GoogleDeepMind), May 20, 2025

SynthID Detector: Implications for AI Transparency

The introduction of the SynthID Detector represents a significant step toward addressing the challenges posed by AI-generated content. By providing a way to check whether digital media was generated with its AI models, Google aims to mitigate the risks of misinformation and unauthorised use of AI tools. The tool's effectiveness and adoption remain to be seen, but its launch signals a growing emphasis on transparency and accountability in the age of generative AI.