At the highly anticipated WWDC 2025, Apple didn’t just update its AI strategy; it rewrote the script. Rather than repeating last year’s sweeping promises, Apple unveiled a more measured, user-focused evolution of AI, rooted in real-world use rather than spectacle. This year’s keynote marked a shift from ambition to integration, building Apple Intelligence deeper into its users’ everyday experience.

While there were no fireworks or jaw-dropping stunts, the company quietly unveiled a series of AI-driven features whose practicality said more than any applause could. From context-aware tools to on-device machine learning enhancements, each one signalled Apple’s commitment to practical AI: improving the user experience, making devices smarter, and keeping privacy-first design at the centre.

Through Apple’s Eyes: Visual Intelligence Expands

Among the standout updates was Visual Intelligence, Apple’s image recognition feature, which blends seamlessly into the iPhone experience. The tool already lets users pull information from their surroundings, such as identifying a plant, recognising clothing, or getting details about a nearby restaurant. It now goes a step further by working with what is on your screen, so it can analyse visuals from apps such as social media. With the help of services like Google Search and ChatGPT, users can instantly search for similar images or ask questions about what they see, turning the iPhone into a real-time window into contextual knowledge.


ChatGPT Joins Apple’s Image Playground

In a notable collaboration, Apple has brought OpenAI’s ChatGPT into its Image Playground, enhancing its AI image generation capabilities. Users can now create visuals in new styles, including anime, oil painting, and watercolour.

Meet Your Workout Buddy

Apple also introduced Workout Buddy, an AI-powered coach built into the Workout app. Using a text-to-speech model, it delivers spoken motivation in real time. Whether you’re pushing for your fastest mile or keeping an eye on your heart rate, it acts like a digital personal trainer, cheering you on throughout the session and summarising your performance once it ends.

Breaking Language Barriers

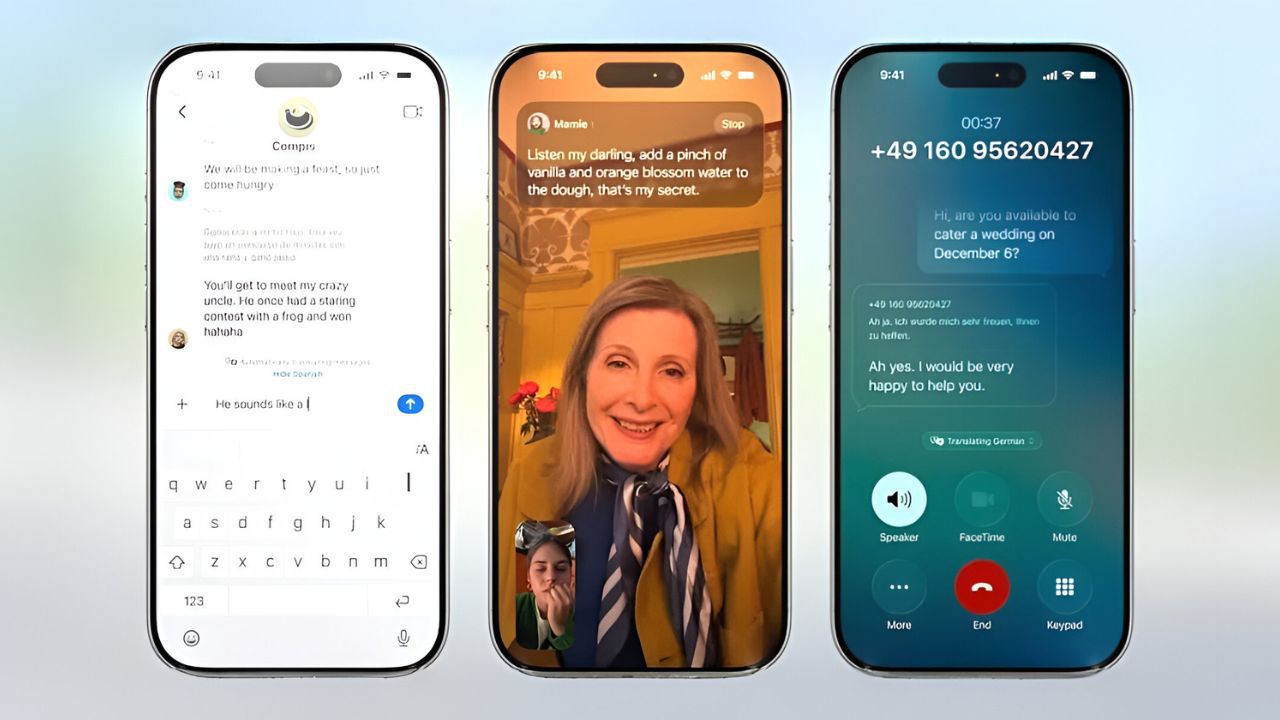

A major leap for global users comes in the form of Live Translation. Apple Intelligence now supports automatic real-time translation in Messages, FaceTime, and even standard phone calls. On FaceTime, users can follow live captions in their preferred language, while on phone calls the translation is spoken aloud, allowing for seamless multilingual conversations.


Smarter Call Handling

To make phone interactions more intelligent, Apple introduced two new features. Call Screening answers calls from unknown numbers in the background and tells you who is calling and why. Meanwhile, Hold Assist detects hold music and offers to wait on the line for you while you multitask. Once a real person is available, you’ll receive a notification to rejoin the call.

AI in Group Chats: Poll Suggestions in Messages

In group messages, Apple Intelligence now notices when a conversation is stuck on a decision, such as where to eat or which movie to watch, and suggests creating a poll to settle it. This context-aware feature brings a more collaborative dynamic to everyday messaging.

Shortcuts Just Got Smarter

Apple is adding AI capabilities to its Shortcuts app, letting users build tasks such as summarising text or automating daily routines by tapping into Apple Intelligence models, either on device or through Private Cloud Compute, or ChatGPT. This makes the app significantly more capable and easier to use, especially for power users looking to streamline their workflows.


Spotlight Got Smarter

Spotlight on the Mac has been upgraded with contextual awareness. Drawing on Apple Intelligence, it can now take into account what you are doing and suggest actions tailored to your current activity, making its search results more relevant.

Offline AI for Developers

In a developer-friendly move, Apple launched the Foundation Models framework, which gives third-party developers access to the on-device model at the core of Apple Intelligence, even when the device is offline. Developers can build intelligent features into their apps while running on Apple’s secure on-device infrastructure, a move that could spark a new wave of innovation within the Apple ecosystem.


Siri Left Behind, for Now

One of the biggest letdowns of WWDC 2025 was the absence of significant updates to Siri. Despite earlier promises, Apple’s virtual assistant did not receive its anticipated AI-powered overhaul. Craig Federighi, Apple’s senior vice president of Software Engineering, acknowledged the delay and said Apple would share more about the upgrades in the coming year. The postponement could raise concerns about Apple’s competitive standing in the fast-moving AI assistant market, especially as rivals continue to advance.

Though WWDC 2025 lacked the show-stopping AI announcements some expected, Apple made it clear that its AI vision is rooted in thoughtful integration rather than spectacle. With tools designed to be genuinely helpful, from workout coaching to real-time translation, the company is steadily building intelligence into the fabric of its devices. Siri may have taken a back seat this year, but Apple Intelligence is clearly in the driver’s seat for the future.